Which of the following hyperparameter optimization methods automatically makes informed selections of hyperparameter values based on previous trials for each iterative model evaluation?

Which of the following statements describes a Spark ML estimator?

A machine learning engineer has identified the best run from an MLflow Experiment. They have stored the run ID in the run_id variable and identified the logged model name as "model". They now want to register that model in the MLflow Model Registry with the name "best_model".

Which lines of code can they use to register the model associated with run_id to the MLflow Model Registry?

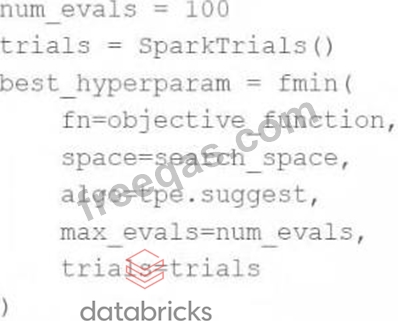
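For reference, here is a minimal sketch of one way to register a run's logged model, assuming MLflow is installed and the model was logged under the artifact path "model" as the question states. The helper names `build_model_uri` and `register_best_model` are hypothetical and exist only for illustration; `mlflow.register_model` is the MLflow function that takes a model URI and a registry name.

```python
def build_model_uri(run_id: str, artifact_path: str = "model") -> str:
    """Build a runs:/ URI pointing at a model logged under the given run."""
    return f"runs:/{run_id}/{artifact_path}"


def register_best_model(run_id: str, name: str = "best_model"):
    """Register the run's logged model in the MLflow Model Registry."""
    import mlflow  # imported lazily so the URI helper works without MLflow

    return mlflow.register_model(model_uri=build_model_uri(run_id), name=name)
```

Calling `register_best_model(run_id)` would create the registered model "best_model" if it does not exist yet, or add a new version to it if it does.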

A data scientist wants to tune a set of hyperparameters for a machine learning model. They have wrapped a Spark ML model in the objective function objective_function and they have defined the search space search_space.

As a result, they have the following code block:

Which of the following changes do they need to make to the above code block in order to accomplish the task?

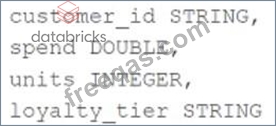

A data scientist is working with a feature set with the following schema:

The customer_id column is the primary key in the feature set. Each of the columns in the feature set has missing values. They want to replace the missing values by imputing a common value for each feature.

Which of the following lists all of the columns in the feature set that need to be imputed using the most common value of the column?
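As a rough illustration of what imputing with the most common value (the mode) means, the sketch below fills missing entries in a single column. The schema is not shown here, so the column name and values are hypothetical; the helper `impute_with_mode` is also an illustrative name, not a library function. Mode imputation is usually applied to categorical columns, while numeric columns are more often filled with the mean or median.

```python
from collections import Counter


def impute_with_mode(values):
    """Replace None entries with the most frequent non-missing value."""
    observed = [v for v in values if v is not None]
    if not observed:
        return list(values)  # no observed values to compute a mode from
    mode, _ = Counter(observed).most_common(1)[0]
    return [mode if v is None else v for v in values]


# Hypothetical categorical column; not taken from the question's schema.
loyalty_tier = ["gold", None, "silver", "gold", None]
imputed_tier = impute_with_mode(loyalty_tier)
```

A primary key such as customer_id identifies rows rather than describing them, so it is not a candidate for this kind of imputation.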
