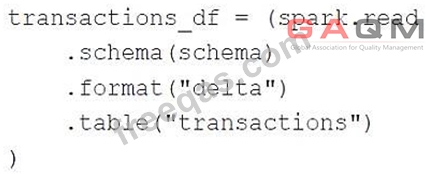

A data engineer is using the following code block as part of a batch ingestion pipeline to read from a composable table:

Which of the following changes needs to be made so this code block will work when the transactions table is a stream source?

A data engineer has a Job with multiple tasks that runs nightly. Each of the tasks runs slowly because the clusters take a long time to start.

Which of the following actions can the data engineer perform to improve the start up time for the clusters used for the Job?

Which of the following SQL keywords can be used to convert a table from a long format to a wide format?

Which of the following Structured Streaming queries is performing a hop from a Silver table to a Gold table?

An engineering manager wants to monitor the performance of a recent project using a Databricks SQL query.

For the first week following the project's release, the manager wants the query results to be updated every minute. However, the manager is concerned that the compute resources used for the query will be left running and cost the organization a lot of money beyond the first week of the project's release.

Which of the following approaches can the engineering team use to ensure the query does not cost the organization any money beyond the first week of the project's release?

Enter your email address to download GAQM.Databricks-Certified-Data-Engineer-Associate.v2024-09-16.q91 Dumps