You are building a process that loads external CSV files into a Delta table using the COPY INTO command. After you run the command a second time, no data is loaded into the table. Why?

COPY INTO table_name

FROM 'dbfs:/mnt/raw/*.csv'

FILEFORMAT = CSV
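A likely explanation is that COPY INTO is idempotent: files in the source location that were already loaded are skipped on later runs, so a second run with no new files loads nothing. The following is a minimal pure-Python sketch of that behavior, not how Databricks implements it. The function `copy_into`, the `loaded_files` set, and the file names `a.csv` and `b.csv` are hypothetical, introduced only for illustration.

```python
def copy_into(table, loaded_files, source_files):
    """Toy model of an idempotent load: skip files already recorded as loaded."""
    rows_loaded = 0
    for path, rows in source_files.items():
        if path in loaded_files:
            continue  # already ingested by an earlier run, so skip it
        table.extend(rows)
        loaded_files.add(path)
        rows_loaded += len(rows)
    return rows_loaded


# Hypothetical source files and their rows (assumed for illustration).
source_files = {
    "dbfs:/mnt/raw/a.csv": [("1", "x")],
    "dbfs:/mnt/raw/b.csv": [("2", "y")],
}

table, loaded_files = [], set()
first_run = copy_into(table, loaded_files, source_files)   # loads both files
second_run = copy_into(table, loaded_files, source_files)  # nothing new to load
print(first_run, second_run)
```

In this sketch the second call returns 0 because every path is already in `loaded_files`; adding a new file to `source_files` would make the next run load only that file.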

You want to process the data based on two variables: proceed if the department is supply chain or if the process flag is set to True.
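One way to express this check in Python is a single condition joined with `or`. This is a sketch under assumptions: the variable names `department` and `process`, the literal `"supply chain"`, and the helper `should_process` are not given in the source and are chosen here only for illustration.

```python
def should_process(department, process):
    """Return True if the department is supply chain or the process flag is set."""
    # `or` short-circuits: the flag is only consulted when the department does not match.
    return department == "supply chain" or bool(process)


# Hypothetical inputs covering the three interesting cases.
matches_department = should_process("supply chain", False)
matches_flag = should_process("finance", True)
matches_neither = should_process("finance", False)
```

Note that Python compares strings with `==` and uses the keyword `or`, not `||` or a single `=`.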

A Databricks job has been configured with 3 tasks, each of which is a Databricks notebook. Task A does not depend on other tasks. Tasks B and C run in parallel, with each having a serial dependency on Task A.

If task A fails during a scheduled run, which statement describes the results of this run?
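The dependency behavior can be illustrated with a small pure-Python model. It assumes the default rule that a task runs only when all of its upstream tasks succeeded; it is not the Databricks scheduler, it runs tasks one after another rather than in parallel, and `run_job`, `failing_task`, and `ok_task` are hypothetical names.

```python
def run_job(tasks, dependencies):
    """Run tasks in the given order (upstream tasks are assumed to come first).

    tasks: task name -> callable
    dependencies: task name -> list of upstream task names
    """
    status = {}
    for name, action in tasks.items():
        upstream = dependencies.get(name, [])
        if any(status[u] != "succeeded" for u in upstream):
            status[name] = "skipped"  # an upstream task did not succeed
            continue
        try:
            action()
            status[name] = "succeeded"
        except Exception:
            status[name] = "failed"
    return status


def failing_task():
    raise RuntimeError("Task A failed")


def ok_task():
    pass


tasks = {"A": failing_task, "B": ok_task, "C": ok_task}
dependencies = {"B": ["A"], "C": ["A"]}
status = run_job(tasks, dependencies)
print(status)
```

Under this assumption, a failure in Task A leaves Tasks B and C skipped rather than run.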

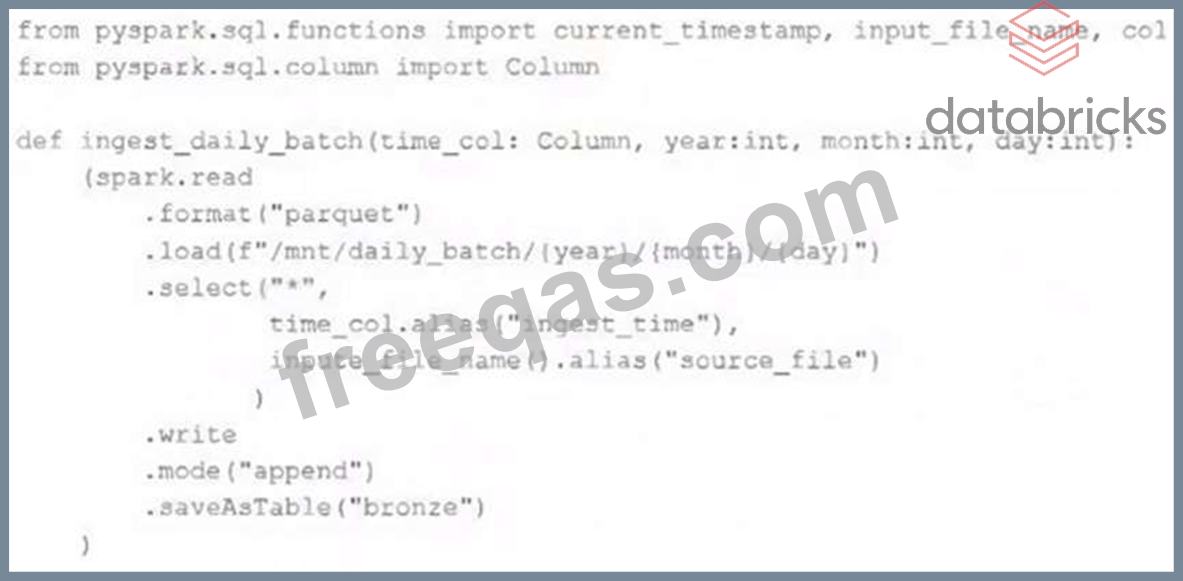

A nightly job ingests data into a Delta Lake table using the following code:

The next step in the pipeline requires a function that returns an object that can be used to manipulate new records that have not yet been processed to the next table in the pipeline.

Which code snippet completes this function definition?

def new_records():

Which statement describes Delta Lake optimized writes?
