A data scientist is working with a Spark DataFrame called customerDF that contains customer information. The DataFrame has a column named email with customer email addresses. The data scientist needs to split this column into username and domain parts.

Which code snippet splits the email column into username and domain columns?
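One way to approach this, as a minimal sketch rather than the answer option the exam intends: the hypothetical helper `split_email` below applies Spark SQL's `split` function through `selectExpr` and takes the two array elements by index. It assumes `customerDF` is a live Spark DataFrame and that every address contains exactly one `@`. The column-API equivalent would be `split(col("email"), "@").getItem(0)` and `.getItem(1)`.

```python
def split_email(customerDF):
    """Return customerDF with `username` and `domain` columns added.

    Hypothetical helper; assumes each email contains exactly one '@'.
    """
    return customerDF.selectExpr(
        "*",                                 # keep all existing columns
        "split(email, '@')[0] AS username",  # part before the '@'
        "split(email, '@')[1] AS domain",    # part after the '@'
    )
```

Calling `split_email(customerDF).show()` on a real DataFrame would display the original columns followed by the two new ones.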

A developer needs to produce a Python dictionary using data stored in a small Parquet table, which looks like this:

The resulting Python dictionary must contain a mapping of region -> region_id for the smallest 3 region_id values.

Which code fragment meets the requirements?

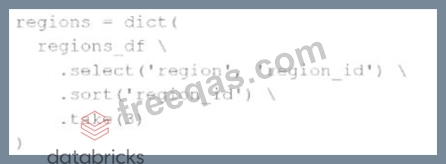

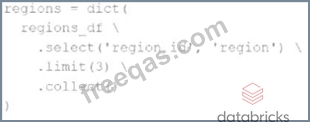

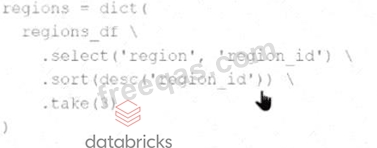

A)

B)

C)

D)
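For the region dictionary question above, the following is a sketch under stated assumptions, not a reconstruction of any of the listed options. The names `smallest_regions` and `regions_df` are hypothetical; only the column names `region` and `region_id` come from the question. The idea is to sort by `region_id`, bring just the first three rows to the driver with `take`, and build the dictionary from those rows. This relies on each PySpark `Row` unpacking like a tuple.

```python
def smallest_regions(regions_df, n=3):
    """Map region -> region_id for the n smallest region_id values.

    Hypothetical helper; regions_df is assumed to be a small DataFrame
    with `region` and `region_id` columns.
    """
    rows = (
        regions_df
        .select("region", "region_id")  # column order fixes the key/value order
        .orderBy("region_id")           # ascending, so smallest ids come first
        .take(n)                        # only n rows are returned to the driver
    )
    # Each row unpacks as (region, region_id).
    return {region: region_id for region, region_id in rows}
```

Using `take(n)` after the sort avoids collecting the whole table, although for a table this small `collect()` followed by slicing would behave the same.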

A Spark engineer must select an appropriate deployment mode for the Spark jobs.

What is the benefit of using cluster mode in Apache Spark™?

An engineer has a large ORC file located at /file/test_data.orc and wants to read only specific columns to reduce memory usage.

Which code fragment will select the columns, i.e., col1, col2, during the reading process?
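A minimal sketch of one way to do this, assuming `spark` is an existing `SparkSession`; the helper name `read_selected_columns` is hypothetical. ORC is a columnar format, so applying `select` directly on the reader's result lets Spark prune the unneeded columns instead of materializing them.

```python
def read_selected_columns(spark, path="/file/test_data.orc"):
    """Read an ORC file and keep only col1 and col2.

    Hypothetical helper; `spark` is assumed to be an active SparkSession.
    """
    # Nothing is read eagerly here: the projection becomes part of the
    # query plan, so only the two requested columns need to be scanned.
    return spark.read.orc(path).select("col1", "col2")
```

The equivalent long form `spark.read.format("orc").load(path).select("col1", "col2")` should behave the same way.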

A data engineer is working on a num_df DataFrame and has a Python UDF defined as:

```python
def cube_func(val):
    return val * val * val
```

Which code fragment registers and uses this UDF as a Spark SQL function to work with the DataFrame num_df?
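As a sketch of the registration step, assuming `spark` is an active `SparkSession` and that `num_df` has an integer column, here given the hypothetical name `num`: `spark.udf.register` makes the Python function callable by name from SQL expressions. The helper name `add_cubed_column` and the output column `cubed` are also illustrative, and the return type `"long"` is an assumption that fits integer input only.

```python
def cube_func(val):
    return val * val * val


def add_cubed_column(spark, num_df, column="num"):
    """Register cube_func for Spark SQL and apply it to one column.

    Hypothetical helper; `column` is an assumed integer column of num_df.
    """
    # Register under a SQL-visible name. "long" is a DDL type string;
    # without a return type, register() defaults to a string result.
    spark.udf.register("cube_func", cube_func, "long")
    # The registered name can now be used inside SQL expressions.
    return num_df.selectExpr(column, f"cube_func({column}) AS cubed")
```

After registration, the same function could also be called from `spark.sql(...)` once `num_df` has been exposed with `createOrReplaceTempView`.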
