A developer is working with a pandas DataFrame containing user behavior data from a web application.

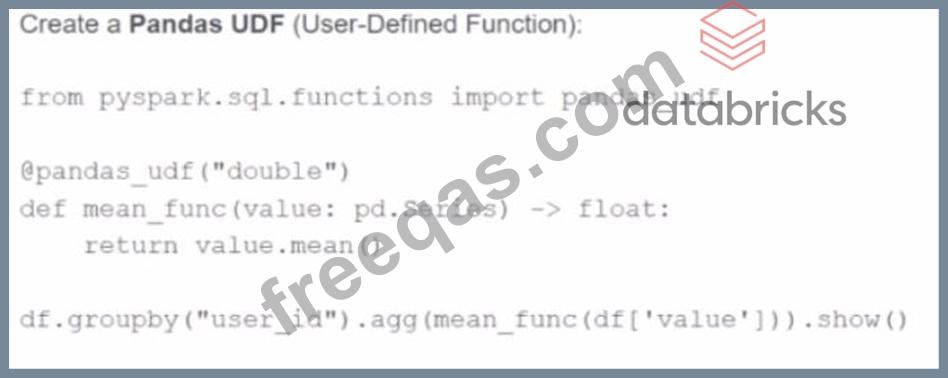

Which approach should be used for executing a groupBy operation in parallel across all workers in Apache Spark 3.5?

A)

Use the applyInPandas API

B)

C)
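For reference, a minimal sketch of the grouped-map pattern that option A names. It assumes the pandas data has already been converted to a Spark DataFrame (for example with `spark.createDataFrame`) and that it has `user_id` and `value` columns. The column names, the schema string, and both helper names are hypothetical and do not come from the question.

```python
from typing import TYPE_CHECKING

if TYPE_CHECKING:  # imported for type hints only, not needed at runtime
    import pandas as pd
    from pyspark.sql import DataFrame

# Assumed output schema; the real columns depend on the data.
OUTPUT_SCHEMA = "user_id long, value double"


def mean_for_one_user(pdf: "pd.DataFrame") -> "pd.DataFrame":
    """Receive all rows of one user_id group as pandas and return one summary row."""
    return pdf.groupby("user_id", as_index=False)["value"].mean()


def mean_value_per_user(sdf: "DataFrame") -> "DataFrame":
    """Group on user_id and let Spark run the pandas function once per group on the workers."""
    return sdf.groupBy("user_id").applyInPandas(mean_for_one_user, schema=OUTPUT_SCHEMA)
```

Each group is handed to the function as an ordinary pandas DataFrame, so existing pandas logic can usually be reused, provided every group fits in a single worker's memory.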

Given:

spark.sparkContext.setLogLevel("<LOG_LEVEL>")

Which set contains valid LOG_LEVEL settings for the Spark driver?
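As a point of reference, the Spark API documentation lists ALL, DEBUG, ERROR, FATAL, INFO, OFF, TRACE, and WARN as the levels accepted by `setLogLevel`. The sketch below wraps the call in a small validation helper. The helper names are hypothetical, and `spark` is assumed to be an existing SparkSession.

```python
# Log levels accepted by SparkContext.setLogLevel, per the Spark API docs.
VALID_LOG_LEVELS = frozenset(
    {"ALL", "DEBUG", "ERROR", "FATAL", "INFO", "OFF", "TRACE", "WARN"}
)


def normalize_log_level(level: str) -> str:
    """Return the upper-cased level, or raise ValueError if it is not a valid level."""
    candidate = level.strip().upper()
    if candidate not in VALID_LOG_LEVELS:
        raise ValueError(f"Unsupported log level: {level!r}")
    return candidate


def set_driver_log_level(spark, level: str) -> None:
    """Validate the level, then apply it through the session's SparkContext."""
    spark.sparkContext.setLogLevel(normalize_log_level(level))
```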

A data engineer needs to write a Streaming DataFrame as Parquet files.

Given the code:

Which code fragment should be inserted to meet the requirement?

A)

B)

C)

D)
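The code and the answer options for this question were not preserved, so the following is only a general sketch of writing a Streaming DataFrame to Parquet with Structured Streaming. The function name and both path parameters are hypothetical. The file sink needs an output path and a checkpoint location, and it writes in append mode.

```python
from typing import TYPE_CHECKING

if TYPE_CHECKING:  # imported for type hints only, not needed at runtime
    from pyspark.sql import DataFrame
    from pyspark.sql.streaming import StreamingQuery


def start_parquet_sink(
    streaming_df: "DataFrame", output_path: str, checkpoint_path: str
) -> "StreamingQuery":
    """Start a streaming query that appends incoming rows as Parquet files."""
    return (
        streaming_df.writeStream
        .format("parquet")                              # file sink, Parquet output
        .option("path", output_path)                    # directory for the data files
        .option("checkpointLocation", checkpoint_path)  # progress tracking for recovery
        .outputMode("append")
        .start()
    )
```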

A data scientist at a financial services company is working with a Spark DataFrame containing transaction records. The DataFrame has millions of rows and includes columns for transaction_id, account_number, transaction_amount, and timestamp. Due to an issue with the source system, some transactions were accidentally recorded multiple times with identical information across all fields. The data scientist needs to remove rows with duplicates across all fields to ensure accurate financial reporting.

Which approach should the data scientist use to deduplicate the transactions using PySpark?
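A minimal sketch of full-row deduplication, assuming the transactions are already loaded into a Spark DataFrame. The function name is hypothetical. Calling `dropDuplicates()` with no arguments compares every column, so only rows that are identical across all fields are collapsed.

```python
from typing import TYPE_CHECKING

if TYPE_CHECKING:  # imported for type hints only, not needed at runtime
    from pyspark.sql import DataFrame


def deduplicate_transactions(transactions: "DataFrame") -> "DataFrame":
    """Keep one copy of each row that is identical across all columns."""
    # With no column list, dropDuplicates() considers the whole row.
    return transactions.dropDuplicates()
```

Passing a subset such as `dropDuplicates(["transaction_id"])` would instead keep one arbitrary row per transaction_id, which is a different requirement from the one described here.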

A data engineer has been asked to produce a Parquet table which is overwritten every day with the latest data. The downstream consumer of this Parquet table has a hard requirement that the data in this table is produced with all records sorted by the market_time field.

Which line of Spark code will produce a Parquet table that meets these requirements?
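A hedged sketch of one way to meet both requirements: a global sort on market_time followed by an overwrite in Parquet format. The function name and the table name used in the usage line are hypothetical. `orderBy` (an alias of `sort`) orders the whole DataFrame, whereas `sortWithinPartitions` orders rows only inside each partition.

```python
from typing import TYPE_CHECKING

if TYPE_CHECKING:  # imported for type hints only, not needed at runtime
    from pyspark.sql import DataFrame


def overwrite_sorted_table(final_df: "DataFrame", table_name: str) -> None:
    """Globally sort by market_time, then replace the table with the sorted data."""
    (
        final_df.orderBy("market_time")  # global sort across all partitions
        .write.format("parquet")
        .mode("overwrite")               # replace the previous day's data
        .saveAsTable(table_name)
    )
```

A daily job might call `overwrite_sorted_table(final_df, "output.market_events")`, where the table name is only a placeholder.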
