A data analyst needs to retrieve employees with 5 or more years of tenure.

Which code snippet filters and shows the list?

A data engineer needs to persist a file-based data source to a specific location. However, by default, Spark writes to the warehouse directory (e.g., /user/hive/warehouse). To override this, the engineer must explicitly define the file path.

Which line of code ensures the data is saved to a specific location?

A data engineer observes that an upstream streaming source sends duplicate records, where duplicates share the same key and have at most a 30-minute difference in event_timestamp. The engineer adds:

dropDuplicatesWithinWatermark("event_timestamp", "30 minutes")

What is the result?

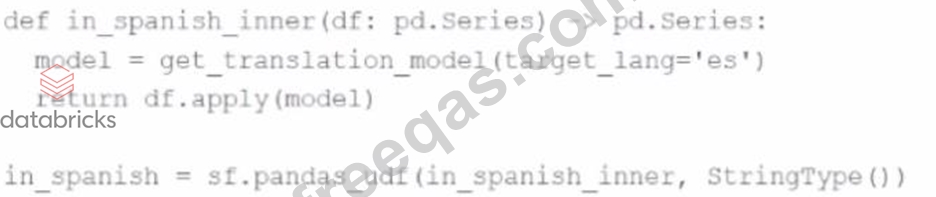

An MLOps engineer is building a Pandas UDF that applies a language model that translates English strings into Spanish. The initial code is loading the model on every call to the UDF, which is hurting the performance of the data pipeline.

The initial code is:

def in_spanish_inner(df: pd.Series) -> pd.Series:
    model = get_translation_model(target_lang='es')
    return df.apply(model)

in_spanish = sf.pandas_udf(in_spanish_inner, StringType())

How can the MLOps engineer change this code to reduce how many times the language model is loaded?
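One common approach is the iterator-of-Series form of a Pandas UDF, where the function receives an iterator of batches and can load the model once before looping. The sketch below shows only the function body, driven by plain pandas so it runs without a cluster. `get_translation_model` here is a hypothetical stand-in that counts how often it is called, not the real loader from the question.

```python
from typing import Iterator

import pandas as pd

load_count = 0  # instrumentation for this sketch only


def get_translation_model(target_lang: str):
    """Hypothetical stand-in for the expensive model loader in the question."""
    global load_count
    load_count += 1
    return lambda text: f"[{target_lang}] {text}"


def in_spanish_inner(batches: Iterator[pd.Series]) -> Iterator[pd.Series]:
    # Load once, before the loop, then reuse the model for every batch.
    model = get_translation_model(target_lang="es")
    for batch in batches:
        yield batch.apply(model)


# Simulate several batches being fed to a single invocation of the function.
batches = [pd.Series(["hello"]), pd.Series(["good", "day"]), pd.Series(["bye"])]
results = list(in_spanish_inner(iter(batches)))

print(load_count)
```

With PySpark available, the function would be registered the same way as in the original code, `sf.pandas_udf(in_spanish_inner, StringType())`. The `Iterator[pd.Series]` type hints are what select the iterator variant, so the model is loaded once per iterator rather than once per batch.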

A Spark application developer wants to identify which operations cause shuffling, leading to a new stage in the Spark execution plan.

Which operation results in a shuffle and a new stage?
