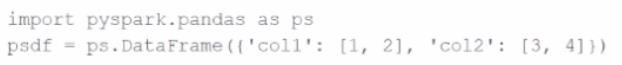

Given the code fragment:

import pyspark.pandas as ps

psdf = ps.DataFrame({'col1': [1, 2], 'col2': [3, 4]})

Which method is used to convert a Pandas API on Spark DataFrame (pyspark.pandas.DataFrame) into a standard PySpark DataFrame (pyspark.sql.DataFrame)?
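
For reference, a minimal sketch of one such conversion, assuming PySpark 3.2 or later with the pandas API on Spark available. The wrapper function `to_spark_dataframe` is a hypothetical name introduced only for illustration; `to_spark()` is the `pyspark.pandas.DataFrame` method being exercised.

```python
def to_spark_dataframe():
    """Build a small pandas-on-Spark DataFrame and convert it to a pyspark.sql.DataFrame."""
    # Imported lazily so this definition loads even where PySpark is not installed.
    import pyspark.pandas as ps

    psdf = ps.DataFrame({'col1': [1, 2], 'col2': [3, 4]})
    # to_spark() returns a pyspark.sql.DataFrame; the pandas-style index
    # is dropped unless index_col is passed.
    return psdf.to_spark()
```

Calling `to_spark_dataframe().printSchema()` on a machine with a working Spark installation should list `col1` and `col2` as columns.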

A data scientist is analyzing a large dataset and has written a PySpark script that includes several transformations and actions on a DataFrame. The script ends with a collect() action to retrieve the results.

How does Apache Spark™'s execution hierarchy process the operations when the data scientist runs this script?
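
As background for this question, the sketch below is a toy model in plain Python, not Spark's API. It assumes the usual description of the hierarchy: an action triggers a job, the job is split into stages at shuffle (wide) boundaries, and each stage runs one task per partition. The names `Op` and `plan_job` are hypothetical, and using the same partition count for every stage is a simplification.

```python
from dataclasses import dataclass


@dataclass
class Op:
    name: str
    wide: bool = False  # True when the operation needs a shuffle (e.g. groupBy, join)


def plan_job(ops, num_partitions):
    """Split a lineage of transformations into stages at shuffle boundaries."""
    stages = [[]]
    for op in ops:
        # A wide operation closes the current stage and opens a new one.
        if op.wide and stages[-1]:
            stages.append([])
        stages[-1].append(op.name)
    # Simplification: every stage gets one task per partition.
    return [
        {"stage": i, "ops": names, "tasks": num_partitions}
        for i, names in enumerate(stages)
    ]


# The lineage is only recorded until an action such as collect() triggers the job.
lineage = [Op("filter"), Op("select"), Op("groupBy", wide=True), Op("agg")]
job = plan_job(lineage, num_partitions=4)
for stage in job:
    print(stage)
```

In this model the narrow operations `filter` and `select` share the first stage, while `groupBy` starts a second stage because it requires a shuffle.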

A data engineer is streaming data from Kafka and requires:

- Minimal latency
- Exactly-once processing guarantees

Which trigger mode should be used?


Which feature of Spark Connect should be considered when designing an application that plans to enable remote interaction with a Spark cluster?

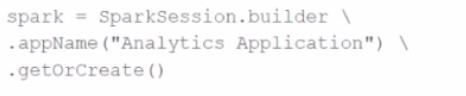

A developer initializes a SparkSession:

spark = SparkSession.builder \
    .appName("Analytics Application") \
    .getOrCreate()

Which statement describes the spark SparkSession?
